Want to integrate generative AI to give your business a competitive edge? Many startups and SMBs struggle to understand AI models and where to start if they want to build one. Without a clear roadmap, efforts to build and scale generative AI may feel out of reach.

The good news: Python’s extensive library ecosystem makes it a powerful choice for working with generative AI. While the field is vast, this article provides a high-level view of key factors, such as model selection, deployment options, and data preparation, that can help you navigate this space. By 2030, the generative AI market is projected to reach $109.37 billion, a sign of vast growth potential for those who adopt it. Understanding the costs of AI development is pivotal for businesses that seek to capitalize on this opportunity. Here, we outline foundational steps and considerations to show how Python can open doors to AI-powered solutions for your business.

What Are Some Popular Python Libraries for Generative AI?

Carefully selected libraries and models allow companies to reach specific goals, boost productivity, and engage customers successfully. Custom generative AI development services can help ensure that the chosen tools align closely with unique business needs. Here is an overview of popular Python libraries for building generative models, along with guidance to help you select the right one for your business application:

- TensorFlow is a widely adopted library developed by Google. Known for its flexibility and scalability, TensorFlow supports deep learning, and its ecosystem includes libraries such as TF-GAN for building generative adversarial networks (GANs). It suits image and text generation applications and offers pre-built components that accelerate development.

- PyTorch, originally developed by Meta and now governed by the PyTorch Foundation, appeals to researchers and developers due to its simple, flexible design. Its dynamic computation graphs, which are built as the code runs, make experimentation and prototyping straightforward. Higher-level libraries such as PyTorch Lightning reduce the boilerplate needed to train generative models like GANs and transformers, which are used in both research and industry.

- Hugging Face Transformers provides key tools for natural language processing. The library offers a large collection of pre-trained transformer models, such as BERT and GPT-2, that developers can load and fine-tune with a few lines of code. Its active community and clear documentation make it accessible to newcomers in generative AI. Developers often rely on it for tasks like text generation, sentiment analysis, and conversational AI, where pre-trained models deliver strong results quickly.

- Keras is known for its simplicity. It began as a standalone library, became TensorFlow’s official high-level API (tf.keras), and since Keras 3 can run on top of TensorFlow, JAX, or PyTorch. Keras supports rapid model development, which proves useful for teams that need working models without complex setups.

Each of these libraries brings unique strengths and provides businesses with a range of options to build and deploy powerful generative AI solutions.

4 Key Steps to Build Generative AI with Python

A generative AI model requires several fundamental steps to come together. From data preparation to output generation, this roadmap outlines the steps needed to build resilient generative AI solutions in Python.

Step 1: Acquire and Prepare Quality Data

The quality of data plays a pivotal role in the success of generative models. Accurate, relevant data enables the model to learn complex patterns and generate realistic outputs, while poor data quality can introduce noise and bias, leading to unreliable results.

Data Collection: Start by gathering a large and diverse dataset that matches the type of content you need. Common sources include web data extraction, open datasets, and proprietary business data. Web data extraction collects specific data types from selected websites, while open datasets offer ready-made collections of images, text, and audio. Drawing on many different sources broadens the dataset’s coverage, which helps the model generalize and produce more accurate results.

Data Cleaning: After you collect the data, remove noise, irrelevant entries, and inconsistencies that may interfere with model performance. Noise often includes incomplete or duplicate records, which lower data quality. Eliminating these elements leaves a refined dataset focused on relevant information. For example, in an image dataset, exclude blurry or unclear images so the model learns from high-quality examples.
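A minimal sketch of this cleaning step in plain Python, assuming each record is a dictionary and that a record counts as incomplete when a required field is missing or blank. The helpers `is_missing` and `clean_records` and the field names `text` and `label` are hypothetical. The sketch also treats records that differ only in letter case or surrounding whitespace as duplicates, which may be too aggressive for some datasets.

```python
def is_missing(value):
    # Treat None and empty or whitespace-only strings as missing values
    return value is None or (isinstance(value, str) and value.strip() == "")


def clean_records(records, required_fields):
    """Drop incomplete records and duplicates, keeping the first occurrence of each."""
    seen = set()
    cleaned = []
    for record in records:
        if any(is_missing(record.get(field)) for field in required_fields):
            continue  # incomplete record
        # Normalize case and surrounding whitespace so near-identical rows count as duplicates
        key = tuple(str(record[field]).strip().lower() for field in required_fields)
        if key in seen:
            continue  # duplicate record
        seen.add(key)
        cleaned.append(record)
    return cleaned


raw = [
    {"text": "Great product, fast shipping", "label": "positive"},
    {"text": "great product, fast shipping ", "label": "Positive"},  # near-duplicate
    {"text": "", "label": "negative"},                               # empty text
    {"text": "Arrived broken", "label": None},                       # missing label
    {"text": "Arrived broken", "label": "negative"},
]

cleaned = clean_records(raw, required_fields=["text", "label"])
print(len(cleaned))  # 2
```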

Data Preparation: Format the data to meet the model’s requirements. For images, typical transformations include resizing every image to the same dimensions, converting images into numerical arrays, and scaling pixel values to a standard range, such as [0, 1] or [-1, 1]. Consistent scaling lets the model interpret every image on the same footing and should match the range of the model’s output layer (a tanh output, for example, produces values in [-1, 1]). These steps establish a reliable data foundation that enables the model to identify patterns and produce high-quality results.
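The NumPy sketch below covers the array-conversion and scaling part of this step. It assumes the images are already resized to a common shape and stored as 8-bit values in [0, 255]; the function name `prepare_images` and its `target_range` parameter are hypothetical. Scaling to [-1, 1] matches a generator whose last layer uses tanh, as in the GAN example in the next step.

```python
import numpy as np


def prepare_images(images, target_range="tanh"):
    """Convert same-sized uint8 images of shape (N, H, W) into scaled float32 arrays."""
    x = np.asarray(images, dtype=np.float32)
    if target_range == "tanh":
        return x / 127.5 - 1.0   # [0, 255] -> [-1, 1], matching a tanh output layer
    return x / 255.0             # [0, 255] -> [0, 1], matching a sigmoid output layer


# One hypothetical 2x2 grayscale image
batch = np.array([[[0, 255], [127, 128]]], dtype=np.uint8)
scaled = prepare_images(batch)
print(scaled.shape, scaled.min(), scaled.max())  # (1, 2, 2) -1.0 1.0
```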

Step 2: Choose a Generative Model Architecture

Choosing the right model architecture is important for generating content that fits your specific needs. For companies that require customized solutions, custom generative AI development services can help ensure that the chosen architecture aligns with business goals. Below are three widely used architectures:

Generative Adversarial Networks (GANs): GANs use two neural networks, a generator and a discriminator. The generator creates new data from random noise, while the discriminator tries to tell generated samples apart from real ones. Training the two against each other gradually improves the generator’s ability to produce outputs that closely match real data. GANs are well suited to tasks such as image creation, video synthesis, and other high-quality visual content.

Example: Simple GAN Implementation Using TensorFlow

```python
import tensorflow as tf
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Input, Dense, LeakyReLU, Reshape, Flatten

LATENT_DIM = 100  # size of the random noise vector fed to the generator

# Define the generator: noise vector -> 28x28 image with pixel values in [-1, 1]
def build_generator():
    model = Sequential()
    model.add(Input(shape=(LATENT_DIM,)))
    model.add(Dense(128))
    model.add(LeakyReLU(0.2))  # negative slope of 0.2 for inputs below zero
    model.add(Dense(256))
    model.add(LeakyReLU(0.2))
    model.add(Dense(512))
    model.add(LeakyReLU(0.2))
    model.add(Dense(28 * 28, activation='tanh'))  # tanh matches images scaled to [-1, 1]
    model.add(Reshape((28, 28)))
    return model

# Define the discriminator: 28x28 image -> probability that the image is real
def build_discriminator():
    model = Sequential()
    model.add(Input(shape=(28, 28)))
    model.add(Flatten())
    model.add(Dense(512))
    model.add(LeakyReLU(0.2))
    model.add(Dense(256))
    model.add(LeakyReLU(0.2))
    model.add(Dense(1, activation='sigmoid'))
    return model

# Build both models; compile the discriminator for real-vs-fake classification
generator = build_generator()
discriminator = build_discriminator()
discriminator.compile(optimizer='adam', loss='binary_crossentropy')

# Display model summaries
generator.summary()
discriminator.summary()
```
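The example above builds the two networks but stops before training. The adversarial objective itself is binary cross-entropy applied with different targets, which the NumPy sketch below makes explicit. It assumes `d_real` and `d_fake` are the discriminator’s sigmoid outputs on a batch of real and generated images, and it uses the common "non-saturating" generator loss, one of several GAN loss variants. The function names are illustrative, not part of TensorFlow.

```python
import numpy as np


def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy; predictions are clipped to avoid log(0)."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return float(-np.mean(y_true * np.log(y_pred) + (1 - y_true) * np.log(1 - y_pred)))


def discriminator_loss(d_real, d_fake):
    # The discriminator should output 1 for real images and 0 for generated ones
    return (binary_cross_entropy(np.ones_like(d_real), d_real)
            + binary_cross_entropy(np.zeros_like(d_fake), d_fake))


def generator_loss(d_fake):
    # Non-saturating loss: the generator wants the discriminator to output 1 on fakes
    return binary_cross_entropy(np.ones_like(d_fake), d_fake)


# Discriminator outputs for a batch in which it easily spots the fakes
d_real = np.array([0.9, 0.8])
d_fake = np.array([0.1, 0.2])
print(discriminator_loss(d_real, d_fake))  # low: the discriminator is winning
print(generator_loss(d_fake))              # high: the generator must improve
```

In a full training loop, each step computes these two losses on a fresh batch, updates the discriminator’s weights with the first and the generator’s weights with the second, and repeats until the generator’s samples become hard to distinguish from real data.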

Variational Autoencoders (VAEs): A VAE’s encoder maps input data to a probability distribution over a compact latent space, and its decoder turns samples from that space back into data. Because the latent space is smooth and continuous, decoding new samples produces new outputs. This architecture suits tasks that involve dimensionality reduction and data reconstruction, such as image generation, anomaly detection, and improving data quality (for example, denoising), and it can capture complex patterns within the data.
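To make the encode, sample, and decode idea concrete, here is a NumPy sketch of the two pieces that distinguish a VAE from a plain autoencoder: the reparameterization trick, which samples a latent vector as z = mu + sigma * eps, and the KL divergence between the encoder’s diagonal Gaussian and a standard normal prior. It assumes the encoder outputs a mean `mu` and a log-variance `log_var` per latent dimension; the encoder and decoder networks are omitted, and the function names are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)


def reparameterize(mu, log_var):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1).

    In an autodiff framework, this form keeps z differentiable with respect to
    mu and log_var, which is what lets a VAE train its encoder by backpropagation.
    """
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps


def kl_divergence(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dims and averaged over the batch."""
    kl_per_sample = 0.5 * np.sum(mu**2 + np.exp(log_var) - 1.0 - log_var, axis=1)
    return float(np.mean(kl_per_sample))


def vae_loss(x, x_reconstructed, mu, log_var):
    # Reconstruction error (sum of squared errors per sample) plus the KL regularizer
    reconstruction = float(np.mean(np.sum((x - x_reconstructed) ** 2, axis=1)))
    return reconstruction + kl_divergence(mu, log_var)


# Hypothetical encoder outputs for a batch of 4 samples with a 2-dimensional latent space
mu = np.zeros((4, 2))
log_var = np.zeros((4, 2))
z = reparameterize(mu, log_var)
print(z.shape)                     # (4, 2)
print(kl_divergence(mu, log_var))  # 0.0: the encoder's distribution equals the prior
```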

Transformer-Based Models: Originally developed for natural language tasks, transformers now serve a wide range of generative applications, such as text generation, image synthesis, and code completion. These models rely on self-attention mechanisms to process sequential data efficiently, which makes them well-suited to produce coherent text, natural dialogue, or other creative outputs based on context.
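The self-attention mechanism can be sketched in a few lines of NumPy. This is single-head scaled dot-product attention, softmax(QK^T / sqrt(d)) V, with randomly initialized projection matrices standing in for learned weights; real transformers add multiple heads, residual connections, and layer normalization. The optional `causal` flag masks future positions, as decoder models like GPT-2 do. The function names are illustrative.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row maximum before exponentiating for numerical stability
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def self_attention(x, w_q, w_k, w_v, causal=False):
    """Single-head scaled dot-product self-attention; x has shape (seq_len, d_model)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # similarity of every position to every other
    if causal:
        # Decoder models such as GPT-2 hide later positions from each token
        future = np.triu(np.ones(scores.shape, dtype=bool), k=1)
        scores = np.where(future, -np.inf, scores)
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights


rng = np.random.default_rng(0)
seq_len, d_model, d_head = 5, 8, 4
x = rng.standard_normal((seq_len, d_model))  # stand-in for 5 token embeddings
w_q, w_k, w_v = (rng.standard_normal((d_model, d_head)) for _ in range(3))
output, weights = self_attention(x, w_q, w_k, w_v)
print(output.shape)                          # (5, 4)
```

Each output row is a weighted mix of every position’s value vector, which is how the model uses context from the whole sequence when producing the next piece of text.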

Example: Text Generation Using Hugging Face Transformers

```python
from transformers import GPT2LMHeadModel, GPT2Tokenizer

# Load the pre-trained GPT-2 model and tokenizer (return_tensors='pt' requires PyTorch)
model_name = 'gpt2'
model = GPT2LMHeadModel.from_pretrained(model_name)
tokenizer = GPT2Tokenizer.from_pretrained(model_name)

# Encode the prompt; the tokenizer returns input IDs and an attention mask
input_text = "The future of AI is"
inputs = tokenizer(input_text, return_tensors='pt')

# Generate up to 50 tokens in total (prompt included) with greedy decoding.
# GPT-2 has no padding token, so reuse the end-of-sequence token for padding.
output = model.generate(
    **inputs,
    max_length=50,
    num_return_sequences=1,
    pad_token_id=tokenizer.eos_token_id,
)

generated_text = tokenizer.decode(output[0], skip_special_tokens=True)
print("Generated Text:\n", generated_text)
```

Step 3: Train Your Model

Training a generative model requires a structured approach to reach optimal performance. Python libraries like TensorFlow and PyTorch offer powerful tools to define the architecture of your neural network. These tools let you specify the number of layers, neurons, and activation functions to match your project’s needs.

- Define the Architecture and Set Parameters

To start, set up the model’s structure. Choose the number of layers, specify the neurons per layer, and select activation functions based on the type of content you aim to generate. For instance, ReLU (Rectified Linear Unit) is commonly used in hidden layers, sigmoid suits binary outputs such as a GAN discriminator’s real-or-fake score, and softmax is typically used in the output layer of multi-class classifiers and to turn a language model’s scores into token probabilities.
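The activation functions discussed here, plus the sigmoid used by the discriminator in the GAN example, take only a line or two of NumPy each. This is an illustrative sketch; in practice you select them by name in TensorFlow or PyTorch rather than writing them yourself.

```python
import numpy as np


def relu(x):
    # Passes positive values through and zeroes out negatives (common in hidden layers)
    return np.maximum(0.0, x)


def sigmoid(x):
    # Squashes each value into (0, 1); used for binary outputs such as a GAN discriminator
    return 1.0 / (1.0 + np.exp(-x))


def softmax(logits):
    # Turns a vector of scores into probabilities that sum to 1 (multi-class outputs)
    shifted = logits - np.max(logits)
    e = np.exp(shifted)
    return e / e.sum()


z = np.array([-2.0, 0.0, 3.0])
print(relu(z))       # [0. 0. 3.]
print(sigmoid(0.0))  # 0.5
print(softmax(z))    # probabilities; the largest goes to the largest score
```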

- Configure the Model with a Loss Function and Optimizer

A key step is to define a suitable loss function that measures how far the model’s predictions deviate from the target outputs. GANs often use binary cross-entropy, while VAEs combine a reconstruction loss, such as mean squared error (MSE), with a Kullback-Leibler (KL) divergence term that keeps the latent space close to a standard normal distribution. Pair the loss function with an optimizer like Adam or SGD (Stochastic Gradient Descent) to adjust model parameters efficiently.

Example of Configuring a Model with TensorFlow:

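```python
import tensorflow as tf

# A small binary classifier: two ReLU hidden layers and a sigmoid output
model = tf.keras.Sequential([
    tf.keras.layers.Dense(128, activation='relu'),
    tf.keras.layers.Dense(256, activation='relu'),
    tf.keras.layers.Dense(1, activation='sigmoid'),
])

# Adam optimizer with binary cross-entropy, tracking accuracy during training
model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])
```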

- Feed the Data and Train the Model

After you set up the architecture, load the prepared data into the model. Divide your dataset into training and validation sets to track performance and detect overfitting. During training, backpropagation computes the gradient of the loss with respect to each weight, and the optimizer uses these gradients to update the weights. This step refines the model and helps it recognize key patterns.
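A minimal sketch of a shuffled training/validation split in NumPy, assuming features and labels are arrays with one row per example. The helper `train_validation_split` and the toy data are hypothetical; scikit-learn’s train_test_split provides an equivalent utility.

```python
import numpy as np


def train_validation_split(x, y, validation_fraction=0.2, seed=42):
    """Shuffle the examples, then hold out a fraction of them for validation."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(len(x))
    n_val = int(len(x) * validation_fraction)
    val_idx, train_idx = indices[:n_val], indices[n_val:]
    return x[train_idx], y[train_idx], x[val_idx], y[val_idx]


x = np.arange(100).reshape(50, 2)  # 50 toy examples with 2 features each
y = np.arange(50) % 2              # toy binary labels
x_train, y_train, x_val, y_val = train_validation_split(x, y)
print(len(x_train), len(x_val))    # 40 10
```

Keras can also hold out data through the validation_split argument of model.fit; note that it takes the last fraction of the arrays without shuffling them first, so shuffle beforehand if your data is ordered.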

- Adjust Hyperparameters for Optimization

Test different hyperparameters, such as learning rate, batch size, and number of epochs, to optimize model performance. Start with a moderate learning rate (0.001 is the default for Adam in both Keras and PyTorch) and adjust it based on results. A larger batch size usually speeds up each epoch on parallel hardware but can generalize less well, while a smaller batch size adds gradient noise that may improve generalization but makes training slower.
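To show where the learning rate and batch size act in a training loop, here is a minimal mini-batch gradient descent sketch on a deliberately simple problem: fitting a line y = w * x + b to noise-free toy data with mean squared error. It is not a generative model, and the function `train_linear_model` and its data are hypothetical; the same two knobs play the same role when a framework trains a GAN or VAE.

```python
import numpy as np


def train_linear_model(x, y, learning_rate=0.1, batch_size=16, epochs=100, seed=0):
    """Fit y = w * x + b with mini-batch gradient descent on mean squared error."""
    rng = np.random.default_rng(seed)
    w, b = 0.0, 0.0
    n = len(x)
    for _ in range(epochs):
        order = rng.permutation(n)  # reshuffle the examples every epoch
        for start in range(0, n, batch_size):
            batch = order[start:start + batch_size]
            error = (w * x[batch] + b) - y[batch]  # prediction error on this mini-batch
            # Gradients of the mean squared error with respect to w and b
            grad_w = 2.0 * np.mean(error * x[batch])
            grad_b = 2.0 * np.mean(error)
            # Step against the gradient, scaled by the learning rate
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


x = np.linspace(-1.0, 1.0, 64)
y = 3.0 * x + 2.0  # noise-free toy data
w, b = train_linear_model(x, y)
print(f"w={w:.3f}, b={b:.3f}")  # w=3.000, b=2.000
```

Raising learning_rate sharply (for example, to 1.5) should make the updates overshoot and diverge on this data, while a smaller batch_size performs more, noisier updates per epoch.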

- Validate and Evaluate Model Performance

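After you train the model, assess it on the validation dataset to measure accuracy and generalization. For classification-style components, such as a GAN discriminator, rely on metrics like accuracy, precision, and recall; for the generated content itself, teams typically add task-specific measures, such as perplexity for language models, along with human review. Adjust the model based on these results to improve accuracy and reliability.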
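As an illustration of the metrics named above, here is a plain-Python sketch that computes accuracy, precision, and recall from binary labels, for example a discriminator’s real (1) versus fake (0) predictions on validation images. The function name and the label lists are hypothetical; scikit-learn offers accuracy_score, precision_score, and recall_score for the same purpose.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    correct = sum(1 for t, p in pairs if t == p)
    accuracy = correct / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted positives, how many are right
    recall = tp / (tp + fn) if tp + fn else 0.0     # of actual positives, how many were found
    return accuracy, precision, recall


y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 1, 0, 1, 1, 0, 1, 0]
print(classification_metrics(y_true, y_pred))  # (0.625, 0.6, 0.75)
```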

Example of Model Evaluation in TensorFlow:

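```python
# validation_data: a tf.data.Dataset of (features, labels) batches held out from training
loss, accuracy = model.evaluate(validation_data, verbose=0)
print(f'Validation Accuracy: {accuracy:.2f}')
```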

These steps create a strong foundation to train a generative model, which helps it produce accurate and high-quality outputs that match your specific goals.

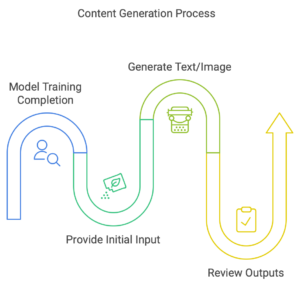

Step 4: Generate New Content

Once the model completes training, you can generate new content. Provide an initial input, often called a seed, to guide the model in creating outputs that match your objectives. A seed may include a text prompt, a partial image, or a particular keyword. The seed plays a key role in shaping the results, as the model uses it to produce coherent and contextually accurate outputs.

To generate text, start with a phrase or sentence that reflects the desired topic or tone. For instance, if you need a product description, include keywords related to product features. The model then uses this input along with patterns it learned during training to produce meaningful content.

For image creation, a standard GAN or VAE generates images from random latent vectors, while conditional variants can take a partial image or a simple sketch as input and fill in the details. These models can produce high-quality images that fit specific styles or themes.

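Decoder-style transformer models such as GPT-2 and GPT-3 help businesses automate content creation, write personalized responses, and generate marketing copy. (Encoder models such as BERT are better suited to understanding tasks like classification than to generating text.) Adjust the initial input and generation parameters, such as temperature, top-k, and maximum length, to control the tone, variety, and length of the output. This flexibility allows you to adapt content to fit your particular needs.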
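Hugging Face’s generate() method exposes these controls through arguments such as do_sample, temperature, top_k, and max_new_tokens. The NumPy sketch below reimplements the core idea for a single decoding step, assuming `logits` are a model’s raw next-token scores; the function name and the four-token vocabulary are hypothetical.

```python
import numpy as np


def sample_next_token(logits, temperature=1.0, top_k=None, rng=None):
    """Sample a token id from raw next-token scores using temperature and optional top-k."""
    if rng is None:
        rng = np.random.default_rng()
    # Temperature below 1 sharpens the distribution; above 1 flattens it
    scaled = np.asarray(logits, dtype=np.float64) / temperature
    if top_k is not None:
        # Keep only the k highest-scoring tokens (ties at the cutoff are also kept)
        cutoff = np.sort(scaled)[-top_k]
        scaled = np.where(scaled < cutoff, -np.inf, scaled)
    probs = np.exp(scaled - np.max(scaled))
    probs /= probs.sum()
    return int(rng.choice(len(probs), p=probs))


logits = [2.0, 1.0, 0.5, -1.0]  # hypothetical scores for a 4-token vocabulary
rng = np.random.default_rng(0)
samples = [sample_next_token(logits, temperature=0.7, top_k=2, rng=rng) for _ in range(1000)]
print(sorted(set(samples)))     # [0, 1]: only the two highest-scoring tokens can appear
```

Repeating this step, appending each sampled token to the input, is how a language model produces a full passage; lower temperatures give more predictable copy, higher ones more varied copy.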

After you generate content, review the outputs to confirm they meet your quality standards. This final step helps ensure consistency and accuracy, especially for customer-facing applications.

Final Words

Generative AI offers new opportunities for businesses to innovate and improve their operations. Python, with its rich set of libraries, helps companies develop models that generate text, images, or entire synthetic datasets suited to their needs. However, success requires a firm grasp of key steps, such as data preparation and selection of the appropriate model architecture.

Tools like TensorFlow, PyTorch, and Hugging Face Transformers simplify model training and deployment. These resources enable teams to optimize performance and refine outputs. High-quality data remains decisive; without it, even advanced models may not provide valuable results.

Businesses must refine models to align them with specific objectives. Adjust hyperparameters, modify model architectures, and evaluate outputs to confirm the AI delivers accurate and relevant content. These approaches open up new possibilities and offer an advantage over competitors.

Investing time in the fundamentals of generative AI will improve your results and support business growth.