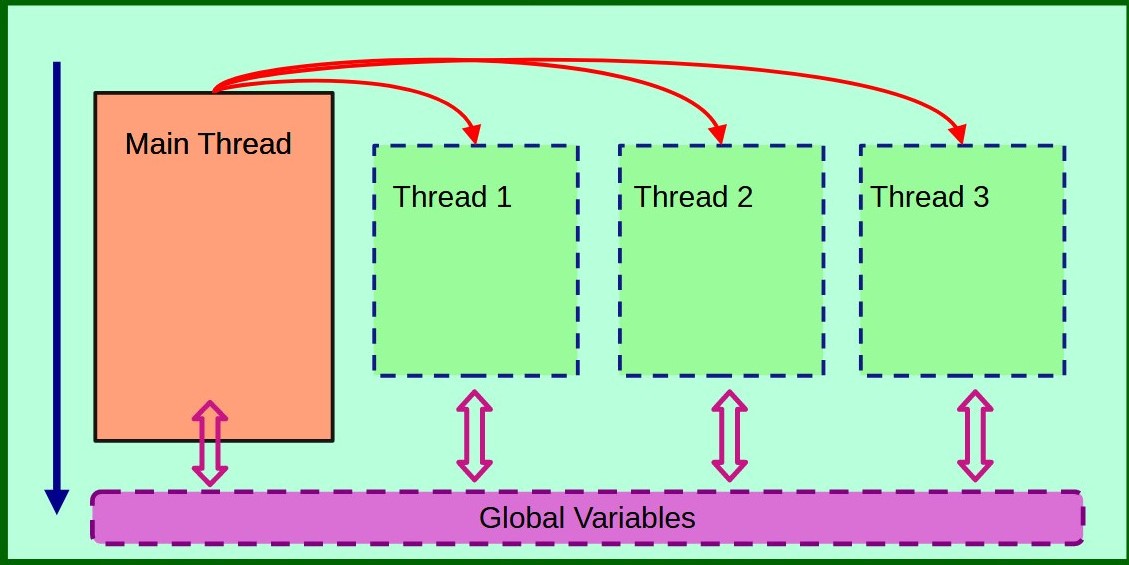

Threading allows multiple threads of execution to run concurrently within a single program, enabling more efficient use of system resources and improved performance for I/O-bound and certain computational tasks.

Basic Threading Concepts

Thread Creation

import threading import time # Basic thread definition def worker_function(name): """ Simple thread function demonstrating basic executionArgs:name (str): Identifier for the thread"""print(f"Thread {name} starting")time.sleep(2) # Simulate workprint(f"Thread {name} finished")# Create and start threadsdef create_basic_threads():"""Demonstrates thread creation and management"""# Create thread objectsthread1 = threading.Thread(target=worker_function, args=("A",))thread2 = threading.Thread(target=worker_function, args=("B",))# Start thread executionthread1.start()thread2.start()# Wait for threads to completethread1.join()thread2.join()

Thread Creation example demonstrates the basics of creating and managing threads in Python. A functionworker_function is defined to simulate work by printing a start message, pausing for 2 seconds, and then printing a completion message. Two threads are created using threading.Thread, with the function passed as the target and arguments supplied via args. The threads are started with start() and synchronized using join(), ensuring the main program waits for both threads to finish execution before proceeding.Thread Synchronization

# Shared resource protectionclass ThreadSafeCounter:"""Thread-safe counter demonstrating synchronization"""def __init__(self):self._value = 0self._lock = threading.Lock()def increment(self):"""Thread-safe increment operation"""with self._lock:self._value += 1def get_value(self):"""Thread-safe value retrieval"""with self._lock:return self._valuedef concurrent_increment():"""Demonstrate thread-safe counter incrementation"""counter = ThreadSafeCounter()threads = []# Create multiple threads incrementing shared counterfor _ in range(10):thread = threading.Thread(target=lambda:[counter.increment() for _ in range(1000)])threads.append(thread)thread.start()# Wait for all threads to completefor thread in threads:thread.join()print(f"Final counter value: {counter.get_value()}")

Thread Synchronization example illustrates how to safely manage shared resources in a multithreaded environment using a ThreadSafeCounter class. The counter's operations are synchronized using a threading.Lock, ensuring that only one thread can access or modify the counter at a time. Multiple threads increment the counter in parallel, but the use of locks prevents race conditions. After all threads complete, the final value of the counter is printed, demonstrating the effectiveness of thread-safe operations.The ThreadSafeCounter class implements a thread-safe counter using Python's threading.Lock(). This is crucial because without synchronization, multiple threads trying to increment the counter simultaneously could lead to race conditions - where the final value becomes unpredictable due to threads interfering with each other's operations. Think of it like multiple people trying to update a single bank account balance at the same time - without proper synchronization, transactions could be lost or corrupted.

In the concurrent_increment() function, we create 10 separate threads, each attempting to increment the counter 1000 times. This creates significant potential for race conditions, as there are 10,000 total increment operations happening concurrently. However, because we use the with self._lock context manager in our increment method, Python ensures that only one thread can execute the increment operation at a time. Any other threads trying to increment while the lock is held must wait their turn. This is why when we print the final counter value, it will always be 10,000 - exactly what we expect from 10 threads each incrementing 1000 times. Without the lock, we might get unpredictable results that are less than 10,000 due to lost updates in race conditions.

Advanced Threading Techniques

Thread Pool Execution

from concurrent.futures import ThreadPoolExecutorimport requestsdef fetch_url(url):"""Concurrent URL fetching using thread poolArgs:url (str): Website to fetchReturns:str: Fetch result or error message"""try:response = requests.get(url, timeout=5)return f"Fetched {url}: {response.status_code}"except Exception as e:return f"Error fetching {url}: {e}"def concurrent_url_fetching():"""Demonstrate thread pool for parallel URL requests"""urls = ["https://www.python.org","https://www.github.com","https://www.stackoverflow.com"]# Use ThreadPoolExecutor for managed concurrent executionwith ThreadPoolExecutor(max_workers=3) as executor:results = list(executor.map(fetch_url, urls))for result in results:print(result)

This code demonstrates a common real-world use case for threading: making multiple HTTP requests concurrently. This is particularly useful because network I/O operations spend a lot of time waiting for responses, and threading allows us to make efficient use of that waiting time by starting other requests.

The ThreadPoolExecutor manager has a pool of worker threads, which offers several advantages over creating threads manually:

- Resource Management: By limiting the pool to 3 workers (

max_workers=3), we prevent thread explosion—creating too many threads, which could overwhelm system resources. The executor automatically queues additional tasks when all workers are busy. - Error Handling: The

fetch_urlfunction includes robust error handling with a try-catch block and timeout. If any request fails (due to network issues, invalid URLs, etc.), it won't crash the entire program but instead returns an error message that gets included in the results.

The executor.map() method is particularly clever - it takes a function and an iterable of arguments (our URLs), automatically distributes the work across the thread pool, and returns results in the same order as the input URLs. This is much cleaner than manually creating and managing threads for each URL. For example, if we have 100 URLs but only 3 workers, the executor will automatically process them in batches of 3, starting new tasks as previous ones complete.

This pattern is especially useful for applications like web scrapers, API clients, or any situation where you need to perform multiple independent I/O operations concurrently while maintaining control over system resources.

Event-Based Synchronization

def event_synchronization():"""Demonstrates thread coordination using Event"""# Shared event for thread signalingstart_event = threading.Event() def worker(event, name):"""Worker waiting for event signal"""print(f"{name} waiting for start signal")event.wait() # Block until event is setprint(f"{name} started after event")# Create threadsthreads = [threading.Thread(target=worker, args=(start_event, f"Thread-{i}"))for i in range(3)]# Start threadsfor thread in threads:thread.start()# Simulate preparation timetime.sleep(2)# Signal all waiting threadsstart_event.set()# Wait for all threadsfor thread in threads:thread.join()

Event-Based Synchronization example shows how to coordinate threads using the threading.Event class. An event object, start_event, acts as a signal for worker threads. Each thread waits for the event to be set before proceeding. The main thread simulates preparation time before signaling all worker threads to start by calling start_event.set(). This approach ensures that threads begin execution only when the necessary conditions are met, enabling controlled and coordinated multithreaded workflows.Best Practices

- Use Locks for Shared Resource Protection: When multiple threads access shared resources, use locks (e.g.,

threading.Lock) to ensure data integrity. Locks prevent race conditions by allowing only one thread to access a resource at a time, but use them judiciously to avoid performance bottlenecks. - Avoid Excessive Thread Creation: Creating too many threads can overwhelm the system and lead to resource exhaustion. Limit the number of threads based on your system's capabilities and the nature of the task to maintain stability and performance.

- Prefer Thread Pools for Managed Concurrency: Instead of manually managing threads, use thread pools (e.g.,

concurrent.futures.ThreadPoolExecutor) to control the number of concurrent threads. Thread pools simplify resource management and improve efficiency by reusing threads. - Handle Exceptions in Threads: Ensure that exceptions within threads are caught and handled properly. Unhandled exceptions can cause threads to terminate prematurely, leading to incomplete tasks or undefined behavior.

- Use Appropriate Synchronization Mechanisms: Besides locks, other synchronization mechanisms like semaphores, condition variables, or events may be more suitable depending on the use case. Select the mechanism that best balances safety and performance for your application.

Common Pitfalls

- Global Interpreter Lock (GIL) Limitations: In CPython, the GIL prevents true parallel execution of threads in CPU-bound tasks. This can limit performance gains when using threading for computationally intensive operations.

- Deadlock Risks: Deadlocks occur when threads wait indefinitely for resources locked by each other. To avoid deadlocks, ensure a consistent locking order and consider using timeout mechanisms with locks.

- Race Conditions: When multiple threads access and modify shared data simultaneously, race conditions can lead to unpredictable results. Use synchronization tools to prevent such issues.

- Excessive Synchronization Overhead: Overusing locks or other synchronization methods can degrade performance. Optimize synchronization to strike a balance between thread safety and efficiency.

When to Use Threading

- I/O-Bound Tasks: Threading is ideal for tasks that spend significant time waiting for I/O operations, such as reading/writing files or interacting with databases.

- Concurrent Network Operations: Use threading for handling multiple simultaneous network requests, such as in web scraping or server-client applications.

- Parallel File Processing: When processing multiple files, threading can help perform operations like compression, reading, or writing concurrently.

- Responsive UI Applications: In GUI applications, threading allows background tasks to run without freezing the user interface, improving user experience.

Performance Considerations

- Thread Creation Has Overhead: Creating and managing threads involves system resources and time. Use thread pools to mitigate this overhead by reusing threads.

- GIL Limits True Parallel Execution: In Python's CPython implementation, threading is not suitable for CPU-bound tasks due to the GIL. Use multiprocessing for true parallelism in such cases.

- Consider Multiprocessing for CPU-Bound Tasks: For tasks requiring heavy computation, the multiprocessing module allows leveraging multiple CPU cores, bypassing the GIL.

- Profile and Benchmark Your Specific Use Case: Before implementing threading, analyze your application's performance requirements. Profile the code to identify bottlenecks and determine if threading or an alternative solution, such as asyncio or multiprocessing, is more appropriate.

Python threading provides powerful concurrency capabilities. Understanding its nuances enables efficient, responsive application design.

More Articles from Python Central